Hosts File Site Blocking

Saturday 15th July 2017

Please see the associated GitHub repository for details on the hosts-file-integrity scripts.

I recently became interested in using the hosts file to block access to malicious or otherwise undesirable domains. This is similar to using an adblocker, but it applies system-wide rather than just in your web browser. With the hosts file, a blocked name never resolves to the real server, so the request is stopped at a much lower level than with an adblocking browser extension.

Skip to Section:

Hosts File Site Blocking + Hosts File Integrity Script
┣━━ What is the hosts file?
┣━━ Steven Black's Hosts File
┣━━ Hosts File Integrity Script
┣━━ Pseudo-Verification
┣━━ How do the scripts work?
┣━━ Dnsmasq
┣━━ iOS/Android
┗━━ Conclusion

What is the hosts file?

The hosts file is present on all modern systems, including Linux, Windows, Mac, iOS and Android. It contains mappings of hostnames to IP addresses, similar to DNS, but it is controlled locally by the user and usually takes priority over DNS.

This allows you to add local, manual overrides for DNS entries. In this case, it is used to redirect undesirable domain names to a null address, which essentially blocks them.

Example hosts file contents:

    127.0.0.1 localhost
    127.0.0.1 hostname
    192.168.1.2 local-override-domain.tld
    192.168.1.2 custom-extension-domain.qwertyuiop
    0.0.0.0 blocked-website.tld
    0.0.0.0 another-blocked-website.tld
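To make the mapping idea concrete, here is a minimal sketch of how a lookup against a hosts-format file could be done by hand. The function name `lookup_host` and the sample entries are my own for illustration, and real resolvers do more than this (for example, they compare names case-insensitively).

```shell
#!/usr/bin/env bash
# lookup_host FILE NAME: print the first address that FILE maps NAME to.
# Comments are ignored and every name on a line is checked.
lookup_host() {
    awk -v name="$2" '
        { sub(/#.*/, "") }                  # drop trailing comments
        { for (i = 2; i <= NF; i++)         # field 1 is the address
              if ($i == name) { print $1; exit } }
    ' "$1"
}

# Demo on a temporary file so the real /etc/hosts is left alone.
demo=$(mktemp)
printf '%s\n' \
    '127.0.0.1 localhost' \
    '192.168.1.2 local-override-domain.tld' \
    '0.0.0.0 blocked-website.tld' > "$demo"

lookup_host "$demo" blocked-website.tld     # prints 0.0.0.0
lookup_host "$demo" localhost               # prints 127.0.0.1
rm -f "$demo"
```

On most Linux systems, `getent hosts blocked-website.tld` should show what the system resolver itself returns, which is a quick way to confirm that an entry in /etc/hosts is being honoured.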

Steven Black's Hosts File

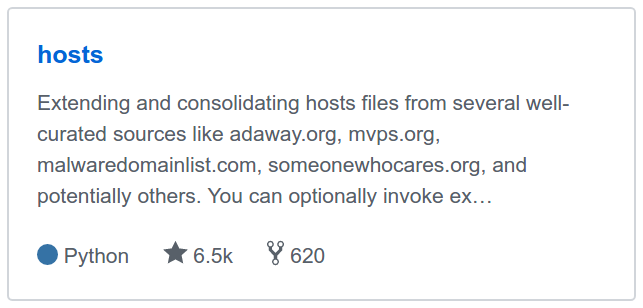

Steven Black on GitHub maintains a fantastic unified hosts file, containing data from many different reputable sources.

The hosts file contains a list of malicious, undesirable or inappropriate sites that should be blocked.

Multiple different variations of it are available and it is updated regularly.

Hosts File Integrity Script

I am now using one of Steven Black's hosts files on all of my systems, and I wrote a bash script that will automatically keep it up to date. The repository is available on my GitHub profile:

This is not designed to be a robust, plug and play solution. It is made specifically to work with one particular variation of Steven Black's hosts files. However, this script could be easily modified in order to work with another variation of the available hosts files or otherwise adapted for the needs of somebody else.

I am aware that Steven Black already provides several scripts for keeping the file up to date on various platforms. However, I created an alternative for my own use that focuses more on security, automation and producing output that is useful to me.

The script should be run on a regular basis; every 24 hours is ideal. It automatically checks for an update and, if one is available, downloads it and pseudo-verifies it. A second script is also available that should be run as a user with write access to /etc/hosts. This second script will automatically implement the new hosts file by moving it to /etc/hosts.

All usage details for the script are available on the GitHub repository.

Pseudo-Verification

The "pseudo-verification" performed by this script refers to checking statistics and filtering out content that matches a specific regex pattern. The theory behind this is that all whitelisted/legitimate content is accounted for, leaving behind anything that shouldn't be there. This is of course not a perfect solution, but it is a good way to perform basic checks on the integrity of the file before putting it in place on your system.

This is a bit of a silly term that I have been using during this project, but it does seem to be viable since it could detect things that cryptographic proof could not. For example, the signing keys of a particular developer could be compromised, or the developer could simply decide to act maliciously. A malicious file signed with a trusted key would then be distributed to everybody, and because people trust the cryptographic system, they would be compromised. While this is a highly unlikely scenario, it is worth considering, and I personally wouldn't even consider using an automatically updating hosts file without some form of verification.

Unfortunately Steven Black does not use signed commits when maintaining the GitHub repository. I know that I don't do this either, but for a project that is likely used by so many people with automatic updates, it would be nice to see.

How do the scripts work?

There are two scripts available in the GitHub repository: "update-verify-file.sh" and "verify-implement-file.sh".

The first script (update-verify-file.sh) should be run as a non-privileged user, and will check for an update, download it if one is available and pseudo-verify the file.

The second script (verify-implement-file.sh) should be run as a user with write access to /etc/hosts. This script will verify the latest version of the hosts file created by the first script and write it to /etc/hosts.

The scripts consist of various stages, each of which must be completed successfully for the hosts file to be verified.

Note that the code snippets below only show the logic, not the full code, which also includes nicely formatted output and so on. See the GitHub repository for the full code.

Checking/creating a lockfile: The script uses a lockfile to ensure that two instances of the script never run at the same time. There is always a 3 second delay between creating the lockfile and the script continuing. This probably isn't required, but it may help to prevent problems when two instances of the script are started very close together.
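For illustration, one common way to take a lockfile in bash is sketched below. This is not the code from my repository: the function names `acquire_lock` and `release_lock` are hypothetical, and the sketch relies on the shell's noclobber option so that testing for the file and creating it happen in a single step.

```shell
#!/usr/bin/env bash
# acquire_lock PATH: create PATH only if it does not already exist.
# With noclobber set, the ">" redirection fails on an existing file.
acquire_lock() {
    ( set -o noclobber; echo "$$" > "$1" ) 2>/dev/null
}

# release_lock PATH: remove the lockfile again.
release_lock() {
    rm -f "$1"
}

# Demo in a temporary directory; a real script would use a fixed path
# and release the lock from an EXIT trap.
demo_dir=$(mktemp -d)
lock="$demo_dir/update.lock"

acquire_lock "$lock" && echo "first attempt: lock acquired"
acquire_lock "$lock" || echo "second attempt: lock already held"
release_lock "$lock"
rm -rf "$demo_dir"
```

Because creation is atomic here, a fixed delay after taking the lock should not be needed to avoid two instances racing each other.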

Checking network connectivity and access: There are 3 different checks that take place right at the start of the script, all of which must be passed before it continues. These checks are: ping, SSL connection and SSL certificate details. The ping check is the first basic network check:

    githubping=$(ping -c 3 -W 2 raw.githubusercontent.com | grep -o "3 packets transmitted, 3 received, 0% packet loss, time ")
    if [ "$githubping" == "3 packets transmitted, 3 received, 0% packet loss, time " ]; then
        #ping check passed
        verifications=$((verifications+1))
    else
        #ping check failed
        exit
    fi

Checking the SSL connection and certificate details is required to ensure that there is access to the real GitHub user content website, rather than something that may be intercepting it, such as a captive portal or a locally-accepted root certificate.

    githubssl=$(echo | openssl s_client -connect raw.githubusercontent.com:443 2>&1 | egrep "^( Verify return code: 0 \(ok\)|depth=0 C = US, ST = California, L = San Francisco, O = \"GitHub, Inc[.]\", CN = www[.]github[.]com)$")
    if [[ $(echo "$githubssl" | tail -n 1) == " Verify return code: 0 (ok)" ]]; then
        #ssl connection passed
        verifications=$((verifications+1))
    else
        #ssl connection failed
        exit
    fi
    if [[ $(echo "$githubssl" | head -n 1) == "depth=0 C = US, ST = California, L = San Francisco, O = \"GitHub, Inc.\", CN = www.github.com" ]]; then
        #certificate details check passed
        verifications=$((verifications+1))
    else
        #certificate details check failed
        exit
    fi

Another network check that I could have implemented would have been checking for a particular piece of content on the GitHub user content website. For example, downloading a known file and ensuring that it matches. I decided against this though since it would require some form of permanent, immutable file. I could have simply created a file in the repository for this but it doesn't really seem like the right thing to do.

Restoring the original file: At this point, a function is defined that will restore the original file should the file verification fail.

Checking for an update to the hosts file: The script gets the SHA256 hash of the live version and compares it to the local version. If the hashes do not match, it is assumed that an update is available.

    livehash=$(curl -s "https://raw.githubusercontent.com/StevenBlack/hosts/master/alternates/REDACTED-REDACTED-REDACTED/hosts" | sha256sum | awk '{ print $1 }')
    localhash=$(sha256sum latest.txt | awk '{ print $1 }')
    if [ "$livehash" == "$localhash" ]; then
        #no update available
        exit
    else
        #update available, downloading update
        mv latest.txt old.txt
        curl -s "https://raw.githubusercontent.com/StevenBlack/hosts/master/alternates/REDACTED-REDACTED-REDACTED/hosts" > latest.txt
        if [[ $(sha256sum latest.txt | awk '{ print $1 }') == "$livehash" ]]; then
            #updated file hash matches
            :
        else
            #updated file hash mismatch
            mv old.txt latest.txt
            exit
        fi
    fi
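The hash comparison itself can be tried offline. The sketch below uses two local files to stand in for the live and local copies; the function names `hash_of` and `update_available` and the file name candidate.txt are hypothetical and not part of my scripts. Fetching the live file once into a candidate file and hashing that copy would also avoid downloading it twice.

```shell
#!/usr/bin/env bash
# hash_of FILE: print only the SHA256 digest of FILE.
hash_of() {
    sha256sum "$1" | awk '{ print $1 }'
}

# update_available CANDIDATE CURRENT: succeed if the digests differ or if
# CURRENT does not exist yet.
update_available() {
    [ ! -f "$2" ] || [ "$(hash_of "$1")" != "$(hash_of "$2")" ]
}

# Demo with two local files standing in for the live and local copies.
work=$(mktemp -d)
printf '0.0.0.0 a.example\n' > "$work/latest.txt"
printf '0.0.0.0 a.example\n0.0.0.0 b.example\n' > "$work/candidate.txt"

if update_available "$work/candidate.txt" "$work/latest.txt"; then
    echo "update available"
    mv "$work/latest.txt" "$work/old.txt"        # keep the previous copy
    mv "$work/candidate.txt" "$work/latest.txt"  # promote the new one
else
    echo "no update available"
fi
rm -rf "$work"
```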

Whitelisted character check: Next, the newly downloaded file is checked against a whitelist of allowed characters.

    if egrep -q "[^][a-zA-Z0-9 #:|=?+%&\*()_;/ @~'\"<>.,\\-]" latest.txt; then
        #whitelist check failed
        mv old.txt latest.txt
        exit
    else
        #whitelist check passed
        verifications=$((verifications+1))
    fi

The pattern is a negated bracket expression, so it matches any character that is not in the list. If grep finds no match, the check passes. If any line contains a character outside the whitelist, verification fails at this stage.

At the time of writing, the whitelist check uses the regex pattern:

[^][a-zA-Z0-9 #:|=?+%&\*()_;/ @~'\"<>.,\\-]
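To show how a negated bracket expression behaves, here is a small sketch with a deliberately reduced whitelist. The variable `allowed` and the function `has_disallowed_chars` are hypothetical names, and the character set is much smaller than the real pattern above.

```shell
#!/usr/bin/env bash
# A deliberately small whitelist for illustration: letters, digits, space,
# tab and a few punctuation characters. The real pattern allows more.
allowed='[:alnum:][:blank:]#._:-'

# has_disallowed_chars FILE: succeed if any line contains a character that
# is not in the whitelist. LC_ALL=C keeps the classes limited to ASCII.
has_disallowed_chars() {
    LC_ALL=C grep -q "[^${allowed}]" "$1"
}

sample=$(mktemp)
printf '# comment\n0.0.0.0 ads.example\n' > "$sample"
if has_disallowed_chars "$sample"; then
    echo "whitelist check failed"
else
    echo "whitelist check passed"      # this branch runs for the clean file
fi

# Append a line containing "<" and ">", which this small whitelist rejects.
printf '0.0.0.0 evil.example <script>\n' >> "$sample"
if has_disallowed_chars "$sample"; then
    echo "whitelist check failed"      # this branch runs now
else
    echo "whitelist check passed"
fi
rm -f "$sample"
```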

Stripping allowed content from the file: Grep is used to strip content from the file that matches specific regex patterns. The idea behind this is that everything legitimate is accounted for, leaving behind anything that shouldn't be there.

    #All 0.0.0.0 entries (including xn-- domains as well as erroneous entries)
    grepstrip=$(egrep -v "^0[.]0[.]0[.]0 [a-zA-Z0-9._-]{,96}[.][a-z0-9-]{2,20}$" latest.txt)
    #All lines starting with a hash (comments)
    grepstrip2=$(echo "$grepstrip" | egrep -v "^#.*$")
    #Blank lines
    grepstrip=$(echo "$grepstrip2" | egrep -v "^$")
    #Lines starting with spaces/multiple tabs
    grepstrip2=$(echo "$grepstrip" | egrep -v "^( | {32}| | {4}| ? {5})# ")
    #Remove default/system hosts file entries
    grepstrip=$(echo "$grepstrip2" | egrep -v "^(127[.]0[.]0[.]1 localhost|127[.]0[.]0[.]1 localhost[.]localdomain|127[.]0[.]0[.]1 local|255[.]255[.]255[.]255 broadcasthost|::1 localhost|fe80::1%lo0 localhost|0[.]0[.]0[.]0 0[.]0[.]0[.]0)$")
    #Remove bits left behind
    grepstrip2=$(echo "$grepstrip" | egrep -v "^(0[.]0[.]0[.]0 REDACTED|0[.]0[.]0[.]0 REDACTED|0[.]0[.]0[.]0 REDACTED)$")
    if [ "$grepstrip2" == "" ]; then
        #all content stripped, check passed
        verifications=$((verifications+1))
    else
        #some content remains, check failed
        mv old.txt latest.txt
        exit
    fi
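The same idea can be sketched in a single grep call by passing several patterns with -e. The function name `unexpected_lines` is hypothetical and the patterns are simplified stand-ins for the ones above. The demo includes an entry that points a domain at a real-looking address instead of 0.0.0.0, which is exactly the sort of line this stage is meant to catch.

```shell
#!/usr/bin/env bash
# unexpected_lines FILE: print every line that no whitelist pattern matches.
# The patterns are simplified stand-ins for the ones used in the script.
unexpected_lines() {
    grep -Ev \
        -e '^0[.]0[.]0[.]0 [a-zA-Z0-9._-]{1,96}[.][a-z0-9-]{2,20}$' \
        -e '^#' \
        -e '^$' \
        -e '^127[.]0[.]0[.]1 localhost$' \
        "$1"
}

sample=$(mktemp)
printf '%s\n' \
    '# Title: example list' \
    '' \
    '127.0.0.1 localhost' \
    '0.0.0.0 ads.example.com' \
    '203.0.113.7 bank.example.com' > "$sample"

leftover=$(unexpected_lines "$sample")
if [ -z "$leftover" ]; then
    echo "all content stripped, check passed"
else
    echo "unexpected content:"
    echo "$leftover"                   # prints 203.0.113.7 bank.example.com
fi
rm -f "$sample"
```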

Checking the file byte and newline count: Checking the rough size of the file ensures that something significant was actually downloaded, rather than just a blank/missing file.

    bytecount=$(wc -c latest.txt | awk '{ print $1 }')
    if (( $bytecount > 1200000 )); then
        #byte count check passed
        verifications=$((verifications+1))
    else
        #byte count check failed
        mv old.txt latest.txt
        exit
    fi
    echo -n "- Checking file newline count: "
    newlinecount=$(wc -l latest.txt | awk '{ print $1 }')
    if (( $newlinecount > 50000 )); then
        #new line count check passed
        verifications=$((verifications+1))
    else
        #new line count check failed
        mv old.txt latest.txt
        exit
    fi
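Both size checks could also be folded into one helper, sketched below. The function name `big_enough` and the thresholds in the demo are hypothetical. Reading the file through a redirect makes wc print only the number, so awk is not needed.

```shell
#!/usr/bin/env bash
# big_enough FILE MIN_BYTES MIN_LINES: succeed only if FILE has more than
# MIN_BYTES bytes and more than MIN_LINES newline characters.
big_enough() {
    local bytes lines
    bytes=$(wc -c < "$1")
    lines=$(wc -l < "$1")
    (( bytes > $2 && lines > $3 ))
}

# Demo with a tiny file and tiny thresholds.
sample=$(mktemp)
printf 'a\nb\nc\n' > "$sample"         # 6 bytes, 3 newlines

if big_enough "$sample" 5 2; then
    echo "size checks passed"
else
    echo "size checks failed"
fi
rm -f "$sample"
```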

Verification complete: If all verification checks were passed, the verification count should be 7. If this is the case, statistics about the file are output to produce a report for the user, such as hashes, timestamps and a diff between the new and old files. A backup is taken and the file is also modified to include the default entries for a Linux system. It is at this point that you could edit the script to add your own custom hosts entries that will be prepended to the file. A timestamp is also added as the first line of the file.

    if [ "$verifications" == "7" ]; then
        #file verification successful
        mv current-hosts.txt backup-hosts.txt
        egrep -v "^(127[.]0[.]0[.]1 localhost|127[.]0[.]0[.]1 localhost[.]localdomain|127[.]0[.]0[.]1 local|255[.]255[.]255[.]255 broadcasthost|::1 localhost|fe80::1%lo0 localhost|0[.]0[.]0[.]0 0[.]0[.]0[.]0)$" latest.txt > current-hosts.txt
        filedate=$(date "+%a %d %b %Y - %r")
        filehostname=$(hostname)
        sed -i "1 i\#Updated: $filedate\n127.0.0.1 localhost\n127.0.0.1 $filehostname\n" current-hosts.txt
    else
        #Verification count != 7
        mv old.txt latest.txt
        exit
    fi
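The sed -i insert syntax used above is specific to GNU sed. As an alternative sketch, the header can be written first and the verified file appended after it. The function name `prepend_header` is hypothetical, and the machine name is passed in as an argument so that the example does not depend on the system it runs on.

```shell
#!/usr/bin/env bash
# prepend_header SOURCE DEST NAME: write a timestamp and the default local
# entries to DEST, followed by the contents of SOURCE.
prepend_header() {
    {
        printf '#Updated: %s\n' "$(date '+%a %d %b %Y - %r')"
        printf '127.0.0.1 localhost\n'
        printf '127.0.0.1 %s\n\n' "$3"
        # Custom entries could be printed here, before the blocklist.
        cat "$1"
    } > "$2"
}

# Demo in a temporary directory.
work=$(mktemp -d)
printf '0.0.0.0 ads.example\n' > "$work/latest.txt"
prepend_header "$work/latest.txt" "$work/current-hosts.txt" "$(uname -n)"
cat "$work/current-hosts.txt"
rm -rf "$work"
```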

Verify and implement hosts script: The second script performs the same file verifications and then actually implements the hosts file on your system by writing it to /etc/hosts. The script does not attempt to update the hosts file. This script needs to be run as a user that has write access to /etc/hosts.

Dnsmasq

If a system or application that you are using does not read the hosts file, does not support the usage of the hosts file, or allows DNS to take priority, Dnsmasq is one possible solution.

It is a DNS forwarder and DHCP server, but we are only interested in the DNS forwarding part of it in this case.

By configuring your system or application to use the DNS server provided by Dnsmasq, you can force the usage of the hosts file. This does not necessarily have to be on the same device as the Dnsmasq server. You could run a local DNS server for your network, or even one that can be accessed remotely, and then configure all of your devices to use it.
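As a rough sketch, a Dnsmasq configuration for this purpose might look like the fragment below. The options are standard Dnsmasq options, but the listen address, the upstream resolver and the extra hosts file path are placeholders that you would need to replace with your own values.

```conf
# /etc/dnsmasq.conf (fragment)

# Listen only on this machine. Use a LAN address to serve other devices.
listen-address=127.0.0.1

# Do not read upstream servers from /etc/resolv.conf, use the one below.
no-resolv

# Placeholder upstream resolver for names that are not in the hosts file.
server=192.0.2.1

# Dnsmasq answers from /etc/hosts by default. An additional hosts-format
# file can be loaded as well (placeholder path).
addn-hosts=/etc/hosts.blocklist
```

Any device configured to use this server for DNS should then receive the null address for blocked names, regardless of whether it has a usable hosts file of its own.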

iOS/Android

While iOS and Android have hosts file functionality, it is generally not accessible to the user unless the phone is jailbroken/rooted. There are workarounds for some versions of Android, but generally speaking it is not possible unless you are rooted.

In order to make use of your hosts file on a non-jailbroken/non-rooted device, you have two main options:

Host your own DNS server and use that on your device.

Use a VPN that forces clients to use a particular DNS server.

Neither of these options is too difficult to configure, and both are definitely worth it since they will allow you to block ads and other undesirable content on your mobile device.

Conclusion

Overall, the whole thing is a bit noddy, but it works well in my situation and I hope that somebody else will find it useful!